This introduces its own problems - the technological analogs of autoimmune diseases (the anti-AI AIs sometimes mistake humans for AIs) or of leukemia (the anti-AI AIs reproduce out of control). But mutation in AIs is certain - are they recursively self-improving, or not? - so I'd rather have the logic favor us from the start than assume we can make them generally friendly. (This is one approach currently being explored by the Singularity Institute.) It's not clear how hacking would hold the singularity in check; malaria might have slowed the Industrial Revolution, but it didn't stop it, and post-singularity, you and I are Plasmodium.

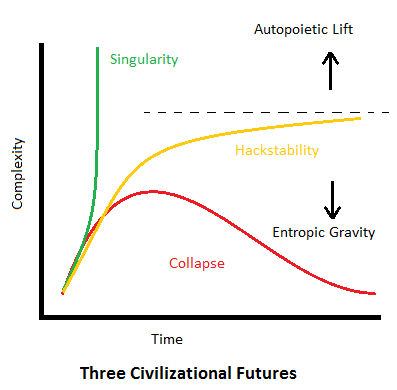

Of Rao's three options, note that singularity is not necessarily different in the long run from collapse. Evolution is non-teleological, and life on Earth has had damaging non-equilibrium periods. Before they occurred, it would not have been obvious that they would not result in the end of life on Earth, and it is not obvious that an event like a technological singularity, no matter how smart it might be, will not result in not only our extinction as we currently understand our species, but its own. A global Proterozoic bloom of cyanobacteria poisoning themselves and remaking the atmosphere will seem Lilliputian, to a future alien scientist looking at Earth's strata, compared to a singularity as usually conceived.

"But the singularity will produce superintelligent entities to which we will be as worms!" you protest. Yes, but granting this, there must be limits to what intelligence can do. Even taking this concept to its ultimate extension, we have to accept that computation requires discrete elements, of which there are a fixed number of combinations in our finite universe. At the very least, there will be a limit. And intelligence isn't magic; if you're at the bottom of a sheer pit with both a worm and an AI that's in the same kind of body as you, and the pit is about to fill with boiling oil, you're all screwed, and it doesn't matter how smart the AI is. (Incidentally, these limits imply that P does not equal NP, in any real sense that is ever possible in the universe, never mind the formalism.) If you're protesting that the singularity overwhelms the fact of an eventual fundamental limitation on computation and that all physical laws are suspended, then you're arguing that superintelligence is the same as magic, and if you believe that you should also believe (as I would) that we should stop wasting our time talking about it.

As I've observed before, if we believe that we can't possibly understand any post-singularity events, this presents epistemological problems closer to home, in the singularity's genesis. If we can't understand it, we can't even argue that it hasn't already happened! Note that this statement is not an argument against the possibility of a singularity or the coherence of the idea, but it does present problems of what and how we can know about such a thing.

Rao doesn't argue strenuously for a "cap" on AI, but if we believe a thing like the singularity is possible, then we should do everything we can to stop it.
